19 Best Hadoop Experts for Big Data Projects

Apache Hadoop’s evolution over the past decade and a half has been driven by a dedicated group of engineers, researchers, and community leaders.

These individuals have not only contributed code to Hadoop’s core, but also founded companies, influenced the community through writing and speaking, and pushed Hadoop to new frontiers at scale. Below is a ranked list of the best currently active Hadoop experts worldwide, based on their open-source contributions, hands-on leadership in startups, technical influence (blogging, speaking), innovations at major tech firms, and even competitive programming accolades where applicable. Each profile highlights their expertise, affiliations, and impact on the Hadoop ecosystem.

- Doug Cutting

- Colin P. McCabe

- Arun C. Murthy

- Aaron Myers

- Wangda Tan

- Owen O’Malley

- Anu Engineer

- Bikas Saha

- Sanjay Radia

- Suresh Srinivas

- Tsz-Wo (Nicholas) Sze

- Todd Lipcon

- Tom White

- Andrew Wang

- Joe Crobak

- Sunil Govindan

- Praveen Polimeni

- Chris Nauroth

- Vinod Kumar Vavilapalli

Now, let’s delve into their profiles and contributions:

Doug Cutting

Nationality: American

Doug is widely known as the “father” of Hadoop.

In 2005, he and Mike Cafarella created Apache Hadoop (named after Doug’s son’s toy elephant) while working on the web crawler project Nutch. Cutting’s vision and advocacy for open-source big data have been instrumental in Hadoop’s success. He worked at Yahoo, where Hadoop was scaled out to large production clusters, and in 2009 joined Cloudera (founded in 2008 as the first company to commercialize Hadoop) as Chief Architect, guiding the integration of Hadoop with enterprise data platforms. Doug’s influence extends beyond code: he helped Hadoop become a top-level Apache project and fostered its community.

An Open Source evangelist, he has received an O’Reilly Open Source Award for his contributions.

- Linkedin: Doug Cutting

- X (Twitter): @cutting

- Github: cutting

Colin P. McCabe

Nationality: American

Colin has made substantial contributions to HDFS internals and bridging Hadoop with native code.

At Cloudera, Colin worked on the HDFS team, where he improved the HDFS read/write pipelines and developed features like the persistent memory read cache. One of his notable projects was implementing HDFS “short-circuit” local reads, in which the DataNode hands file descriptors to a co-located client over a UNIX domain socket so that the client can read block files directly from local disk, bypassing the TCP data path and significantly reducing latency. Colin also led the development of the libhdfs3/C++ HDFS client, enabling high-performance HDFS access from C++ applications and helping projects like Apache Impala and ORC. He contributed to Hadoop’s build system to better support native code compilation.

In Hadoop 3.x, Colin was key in adding the new async HDFS client (C++) and improving erasure-coded file read efficiency. Colin is known for tackling hairy low-level issues: he has fixed race conditions in HDFS, improved the reliability of the journal nodes, and optimized RPC handling.

- Linkedin: Colin McCabe

- Github: cmccabe

Arun C. Murthy

Nationality: Indian

Arun has been a driving force in Hadoop since its early days at Yahoo in 2006. He led the design and development of Hadoop’s next-gen resource manager, YARN (Yet Another Resource Negotiator), which decoupled cluster resource management from MapReduce and enabled Hadoop to run a variety of new workloads.

Arun was a co-founder and Chief Product Officer of Hortonworks, the Hadoop-focused startup formed by Yahoo’s core Hadoop team in 2011. At Hortonworks, and later as CPO at Cloudera after the companies merged, he remained deeply involved in Hadoop’s roadmap. Murthy’s technical contributions (spanning MapReduce, YARN, and ecosystem projects) and his leadership helped drive enterprise Hadoop adoption worldwide. He has 13+ years of Hadoop experience and often shares his perspective on the state of the platform. In 2019, after leaving Cloudera, Arun took on new challenges (e.g., as CPTO at Scale AI), but he continues to be an influential voice in big data.

- Linkedin: Arun C. Murthy

- X (Twitter): @acmurthy

Aaron Myers

Nationality: American

Aaron has been a key Hadoop committer since 2009. As a software engineer at Cloudera, Aaron co-architected HDFS NameNode High Availability along with Todd Lipcon – he helped implement the quorum-based journal and failover controller that allow a standby NameNode to take over on failure, greatly improving HDFS reliability.

Aaron also made extensive contributions to Hadoop security, working on Hadoop’s Kerberos authentication and delegation token systems. He co-presented the landmark “Hadoop Security” talk at Hadoop World 2010, laying out how to secure a Hadoop cluster end-to-end. Beyond HDFS, Aaron contributed to Hive and Impala integration with Hadoop and was involved in creating Apache Sentry (a fine-grained authorization system for Hadoop). He is known for his thoughtful code reviews on JIRA (often signing as “ATM”). After Cloudera, Aaron took on new roles (at companies like Airbnb and Airtable), but Hadoop remains one of his core specialties.

- Linkedin: Aaron Myers

- Github: aaronmyers

- Website/Blog: myers.phd

Wangda Tan

Nationality: Chinese

Wangda has focused his contributions on improving YARN’s scheduler and resource allocation mechanisms. Working with Hortonworks and later Cloudera, Wangda led features such as Node Labels (allowing YARN to schedule jobs to specific classes of hardware) and Resource Types (extending YARN to handle resources beyond CPU and memory, like GPUs).

These features, developed through Hadoop JIRAs like YARN-2492, have enabled Hadoop clusters to support emerging workloads (e.g., machine learning with GPU needs). Wangda also contributed improvements to the YARN web UI and worked on YARN federation (linking multiple YARN clusters). He is an Apache Hadoop PMC member and has been very active on the project’s mailing lists, often handling release management tasks and writing design docs.

Wangda frequently speaks at community events about YARN’s roadmap and best practices for capacity planning.

- Linkedin: Wangda Tan

- Github: wangdatan

Owen O’Malley

Nationality: American

Owen was the first committer added to the Hadoop project in 2006 and has been a stalwart of the community ever since. At Yahoo, he served as Hadoop’s architect for MapReduce and security, helping Hadoop achieve world-record benchmarks (he led Yahoo’s team to win the 2008/2009 GraySort big data sorting benchmark).

Owen’s contributions span from core scheduling and execution improvements to implementing robust security (Kerberos authentication) in Hadoop. He later co-founded Hortonworks in 2011, where, as a Technical Fellow, he continued to guide Hadoop’s development. O’Malley is also the creator of Apache ORC, a popular high-performance columnar storage format for Hadoop, and added ACID transaction support to Apache Hive. Known for his engaging presentations and deep technical knowledge, Owen has been a go-to authority on Hadoop internals.

- Linkedin: Owen O’Malley

- X (Twitter): @owen_omalley

- Github: omalley

Anu Engineer

Nationality: Indian

Anu Engineer (yes, that’s his real surname) has been a prominent Hadoop storage engineer, best known for leading the development of Apache Ozone.

At Hortonworks, Anu recognized the limitations of HDFS for certain workloads and spearheaded Ozone as a new scalable key-value object store within the Hadoop ecosystem. He designed Ozone’s architecture to handle billions of small files and to work efficiently with containerized storage datanodes. Anu also worked on Hadoop’s disk balancing, the HDFS CLI enhancements, and was involved in Hadoop’s support for newer OS/filesystem features. He frequently advocated for Hadoop in the community, speaking at Hadoop Summit about Ozone’s design and the importance of reimagining HDFS for modern hardware.

Under his guidance, Ozone went from a prototype to a full-fledged Hadoop sub-project and then to Apache top-level status. Anu was also involved in Apache HDDS (Hadoop Distributed Data Store) initiatives and contributed to the HDFS RAID (erasure coding) discussions before Ozone. After Hortonworks, he continued to work in cloud storage startups, applying similar principles.

- Linkedin: Anu Engineer

- Github: anuengineer

Bikas Saha

Nationality: Indian

Bikas has been at the forefront of Hadoop’s MapReduce evolution and next-gen processing frameworks. At Yahoo, Bikas worked on Hadoop MapReduce and was instrumental in the early prototyping of YARN (MRv2).

Joining Hortonworks, he became a co-creator and architect of Apache Tez, a DAG execution engine that replaced MapReduce for Hive and Pig, dramatically improving their performance. Through Tez, Bikas showed how Hadoop’s computation layer could be made more flexible and efficient on YARN. Within Hadoop, he contributed to YARN’s resource management and application master design. He also worked on Hive’s LLAP (Low Latency Analytical Processing) which leverages Tez. Bikas has deep roots in Hadoop’s open-source community – he has served on the Apache Tez PMC (and Hadoop PMC) and evangelized Hadoop improvements at many conferences. After Hortonworks, he continued working on large-scale data processing systems, bringing his Hadoop experience into new arenas.

- Linkedin: Bikas Saha

- X (Twitter): @bikassaha

- Github: bikassaha

Sanjay Radia

Nationality: American

Sanjay is a Hadoop pioneer whose expertise lies in distributed file systems. At Yahoo, Sanjay was the chief architect of HDFS (Hadoop Distributed File System) during Hadoop’s formative years.

He designed many of HDFS’s fundamental features, including its namespace management and reliability mechanisms, and also contributed to MapReduce scheduling. In 2011, Sanjay co-founded Hortonworks, joining former Yahoo colleagues to focus on Hadoop’s open-source development. At Hortonworks, he continued as a Senior Hadoop Architect and mentor, influencing HDFS enhancements like federation (scaling NameNode horizontally) and high availability. Sanjay’s career in distributed systems predates Hadoop – he held senior engineering roles at Sun Microsystems and INRIA, developing grid computing and distributed infrastructure software.

- Linkedin: Sanjay Radia

- X (Twitter): @srr

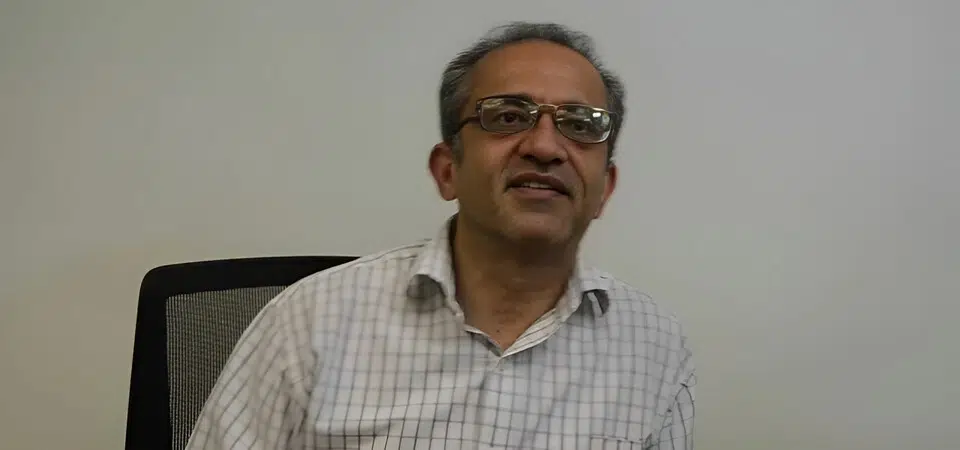

Suresh Srinivas

Nationality: Indian

Suresh is another early Hadoop luminary who helped shape HDFS from the ground up.

A long-time Apache Hadoop PMC member, Suresh designed and developed many key features in HDFS. At Yahoo, he was a core engineer on the HDFS team, contributing to its namespace architecture, replication mechanism, and performance optimizations. Notably, Suresh was instrumental in implementing HDFS’s high-availability NameNode (using shared edit log and failover), eliminating what was previously a single point of failure. In June 2011, he joined fellow Yahoo Hadoop veterans in founding Hortonworks, where he continued as an HDFS architect. Suresh has also driven efforts to adapt Hadoop to new hardware trends (e.g., HDFS caching and tiered storage, as presented at Hadoop Summit).

After Hortonworks, he took on new challenges (leading an open-source metadata project), but he remains active in the Hadoop community as an Apache member.

- Linkedin: Suresh Srinivas

- X (Twitter): @suresh_m_s

- Github: sureshms

Tsz-Wo (Nicholas) Sze

Nationality: American

Tsz-Wo is known for his deep algorithmic contributions to Hadoop’s core. With a Ph.D. in theoretical computer science, Dr. Sze brought a strong analytical approach to improving Hadoop.

He has been a long-time Apache Hadoop committer and served as the PMC chair of Apache Ratis (the consensus library for Hadoop’s storage) while also being a PMC member of Hadoop and Ozone. At Yahoo in Hadoop’s early days, Nicholas worked on HDFS and MapReduce performance; notably, he helped optimize HDFS’s block placement and replication algorithms for reliability and speed. He also contributed to Hadoop’s record-breaking TeraSort benchmarks, fine-tuning the system to handle massive sorts efficiently.

In recent years at Cloudera (as a Principal Engineer), Nicholas has focused on consensus and storage, playing a key role in the development of Hadoop’s sub-project Ozone (a new object store) and ensuring integration with core Hadoop. He frequently shares knowledge in the community, including at Apache events.

- Linkedin: Tsz-Wo Nicholas Sze

- X (Twitter): @szetszwo

- Github: szetszwo

Todd Lipcon

Nationality: American

Todd made a name for himself at Cloudera as one of the most prolific Hadoop contributors of the early 2010s. Described by peers as a “top engineer and heavy hitter in the Hadoop ecosystem”, Todd has made contributions that span HDFS, HBase, and general Hadoop reliability.

He led the development of HDFS High Availability and the introduction of the Quorum Journal Manager, which finally eliminated Hadoop’s single point of failure (the NameNode). He also worked on HDFS durability (synchronization/append support) and performance patches. Todd is a committer and PMC member on Apache HBase as well, where he improved HBase’s resilience and consistency. Notably, he founded Apache Kudu, a storage system for fast analytics on Hadoop (written in C++), leading its design and implementation as its tech lead at Cloudera.
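The core idea behind a quorum-based edit log can be sketched in a few lines: an edit counts as committed only once a strict majority of journal nodes has acknowledged it, so the log survives the loss of a minority of nodes. The sketch below is a toy illustration of that majority rule, not Hadoop’s implementation; `JournalNode`, `healthy`, and `quorum_write` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class JournalNode:
    """Toy stand-in for a journal node; `healthy` is a hypothetical flag."""
    name: str
    healthy: bool = True
    edits: list = field(default_factory=list)

    def append(self, txid: int, edit: str) -> bool:
        # A real journal node would persist the edit durably;
        # this sketch only records it in memory.
        if not self.healthy:
            return False
        self.edits.append((txid, edit))
        return True


def quorum_write(nodes, txid, edit):
    """Return True if a strict majority of nodes acknowledged the edit."""
    acks = sum(1 for node in nodes if node.append(txid, edit))
    return acks > len(nodes) // 2


nodes = [JournalNode("jn1"), JournalNode("jn2"), JournalNode("jn3")]

nodes[2].healthy = False                            # one of three nodes is down
committed = quorum_write(nodes, 1, "mkdir /data")   # 2 of 3 acks: a majority

nodes[1].healthy = False                            # a second node fails
rejected = quorum_write(nodes, 2, "rm /data")       # 1 of 3 acks: no majority
```

With three journal nodes the log tolerates one failure; with five it would tolerate two, which is why such ensembles are usually sized with an odd number of nodes.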

In 2015, Todd moved on to co-found a new company (Tablet) and later joined Google, where he applies his distributed systems expertise on Google Spanner – yet he remains involved in Apache projects (he still holds Hadoop PMC membership and contributes to open source).

- Linkedin: Todd Lipcon

- X (Twitter): @tlipcon

- Github: toddlipcon

Tom White

The best way to understand a system is to build something with it.

Nationality: British

Tom is best known as the author of “Hadoop: The Definitive Guide”, the O’Reilly book that introduced countless engineers to Hadoop.

First published in 2009 (updated through 4th edition), this book earned Tom a reputation as Hadoop’s explainer-in-chief. But Tom is also an accomplished engineer: he started contributing to Hadoop in 2006 and became an Apache Hadoop committer, working on areas like metrics, build infrastructure, and MapReduce. He was part of the Hadoop team at Cloudera, then later joined Microsoft’s Azure team and Google Cloud, helping integrate Hadoop with cloud services. Tom also worked on Apache Whirr (automating Hadoop cluster deployment) and contributed to Apache Avro. His clear writing and examples (such as the “weather data” example in his book) made Hadoop accessible to a broader audience.
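The weather example boils down to finding the maximum temperature recorded for each year. A minimal in-memory sketch of that map, shuffle, and reduce flow is shown below; it is plain Python rather than the Hadoop API, and the `(year, temperature)` records are made-up values in a simplified format, not the dataset used in the book.

```python
from collections import defaultdict

# Hypothetical, simplified records: (year, temperature in degrees Celsius).
records = [(1949, 11.1), (1949, 7.8), (1950, 0.0), (1950, 2.2), (1950, -1.1)]


def map_phase(record):
    """Emit a (key, value) pair for each input record."""
    year, temperature = record
    yield year, temperature


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(year, temperatures):
    """Collapse all values for one key into a single result."""
    return year, max(temperatures)


pairs = [pair for record in records for pair in map_phase(record)]
max_by_year = dict(
    reduce_phase(year, temps) for year, temps in shuffle(pairs).items()
)
# max_by_year == {1949: 11.1, 1950: 2.2}
```

On a real cluster the map and reduce functions run in parallel across many machines and the shuffle moves data over the network, but the shape of the computation is the same.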

White is an Apache member and remains active in open source, recently focusing on data science tooling on cloud.

- X (Twitter): @tom_e_white

- Github: tomwhite

- Website/Blog: tom-e-white.com

Andrew Wang

Nationality: American

Andrew has been a highly active Hadoop committer who worked on a wide array of HDFS improvements and new features.

At Cloudera, Andrew was deeply involved in the HDFS Erasure Coding project (collaborating with Zhe Zhang), the design of in-memory caching (HDFS DataNode caching) for low-latency reads, and numerous fixes to NameNode performance. He acted as release manager for Hadoop releases, including the 3.0.0 line, and championed quality and stability in releases. Andrew also contributed to YARN (containerization and scheduling) and to Hadoop’s metrics and monitoring. He is known in the community for writing clear design documents and for responding thoughtfully to user issues on mailing lists.

In addition, Andrew evangelized Hadoop improvements at conferences – for example, presenting on HDFS innovations at Hadoop Summit. In recent years, he joined Google Cloud’s big data team, influencing cloud-native data processing (though he continues participating in Apache projects).

- Linkedin: Andrew Wang

- X (Twitter): @umbrant

Joe Crobak

Nationality: American

Joe may not be a code contributor, but his impact on the Hadoop world has been through knowledge sharing and community building.

Joe is the creator and curator of Hadoop Weekly (later renamed Data Eng Weekly), a newsletter that for years provided a digest of the top news and technical articles in the Hadoop ecosystem. A “long-time Hadooper” who worked as an engineer at Foursquare and later at LinkedIn, Joe started the newsletter in 2013 to help the community keep up with the rapid pace of developments. Each week, thousands of Hadoop developers, data engineers, and enthusiasts read his summaries of blog posts, release notes, and use-case studies. Joe’s ability to sift through noise and highlight important updates made the newsletter an invaluable resource, connecting the global Hadoop community and spreading best practices. In addition to the newsletter, Joe has been active on Twitter and blogs, commenting on Hadoop trends and tools.

- Linkedin: Joe Crobak

- X (Twitter): @joecrobak

- Github: jcrobak

- Website/Blog: crobak.org

Sunil Govindan

Nationality: Indian

Sunil is an Apache Hadoop committer who played a major role in the Hadoop 3.x line, including serving as the Release Manager for Apache Hadoop 3.2.0.

Based in India and working with Hortonworks/Cloudera, Sunil has contributed significantly to YARN enhancements. He led development of features like YARN Native Services (support for long-running services on YARN) and in-place container upgrades, which allow running services to be updated without full restarts. Sunil also worked on Node Attributes in YARN (together with Karthik Kambatla) and improvements to the YARN Capacity Scheduler for better resource fairness. In HDFS, Sunil contributed the Storage Policy Satisfier, a daemon that automatically moves HDFS blocks between storage tiers according to policies (e.g., cold data to HDD, hot data to SSD).

As 3.2.0 release manager, he coordinated over 1,000 fixes and features, earning praise for guiding one of Hadoop’s “biggest releases”. Sunil frequently shares his experience in community forums and has mentored new contributors in the Hadoop project.

- Linkedin: Sunil Govindan

- Github: sunilgovind

Praveen Polimeni

Nationality: Indian

Praveen is a data engineer specializing in big data solutions, with extensive experience in Hadoop ecosystem technologies and cluster management.

In his article “Snowflake vs Hadoop: A Comprehensive Comparison of Data Warehousing and Big Data Processing Platforms”, he explores the architectural, operational, and use-case differences between the two platforms, highlighting Hadoop’s strengths in distributed storage and batch processing. Throughout his career, he has designed and optimized large-scale data pipelines, implemented data ingestion and processing solutions using Hadoop, and fine-tuned clusters for Apache Spark applications.

- Linkedin: Praveen Polimeni

Chris Nauroth

Nationality: American

Chris made unique contributions by bringing Hadoop compatibility to Windows and enhancing Hadoop’s platform support. As a software engineer at Hortonworks, Chris spearheaded efforts to ensure Hadoop could run natively on Windows Server (adding support for Windows filesystems, path handling, and integration with Active Directory).

He was the release lead for Hadoop 2.2.0, the first stable Hadoop release to run on Windows. Chris also worked on HDFS ACLs (Access Control Lists) to provide finer-grained file permissions beyond the basic POSIX permission model, contributing to Hadoop security. After Hortonworks, he continued his Hadoop work at Google Cloud, helping to integrate open-source Hadoop with Google’s Dataproc service. He remains an Apache Hadoop PMC member and Apache Software Foundation member. On social media and Stack Overflow, Chris often helps users troubleshoot Hadoop issues, especially those related to Windows or cloud deployments.

- Linkedin: Chris Nauroth

- X (Twitter): @cnauroth

- Github: cnauroth

Vinod Kumar Vavilapalli

Nationality: Indian

Vinod is one of the key engineers behind Apache Hadoop YARN and has remained closely involved with its evolution as a Hadoop PMC member. He co-authored the widely cited YARN paper that described how Hadoop separated resource management from MapReduce, which opened the door to running many different distributed workloads on the same cluster.

In recent years, he has continued to publish deep technical work on YARN scheduling and resource management, including writing about YuniKorn, a scheduler designed to unify scheduling across YARN and Kubernetes. He has also been active in Apache release and governance discussions through the Hadoop project’s public mailing lists.

- Linkedin: Vinod Kumar Vavilapalli

- X (Twitter): @Tshooter

Wrap Up

These experts represent exceptional talent, making them extremely challenging to headhunt. However, there are thousands of other highly skilled IT professionals available to hire with our help. Contact us, and we will be happy to discuss your hiring needs.

Note: We’ve dedicated significant time and effort to creating and verifying this curated list of top talent. However, if you believe a correction or addition is needed, feel free to reach out. We’ll gladly review and update the page.

Frequently Asked Questions

Are Hadoop engineers still in demand?

Yes. Hadoop engineers are still in demand, though demand has shifted as newer big data tools like Spark and cloud-native platforms gain traction. Many organizations continue to maintain Hadoop-based systems.

How much do Hadoop consultants charge?

Hadoop consultants typically charge between $60 and $120 per hour, depending on their experience and the complexity of the project.

What skills should a Hadoop expert have?

A strong Hadoop expert should have hands-on experience with HDFS, MapReduce, Hive, Pig, and ecosystem tools such as HBase or Oozie, along with knowledge of data architecture and cluster management.

Where can I hire Hadoop specialists?

You can hire Hadoop specialists through platforms like Upwork, Toptal, and Clutch, or by working with IT consulting firms experienced in big data solutions.

Which companies use Hadoop?

Companies such as Yahoo, Facebook, LinkedIn, Netflix, and Adobe have used Hadoop to manage and process large-scale data workloads.