AI for Coding: Benefits, Challenges, Tools and Future

Artificial Intelligence (AI) is rapidly reshaping software development, offering faster cycles, better code quality and lower costs. But it also brings risks like unreliable suggestions and legal or ethical concerns.

This research provides an in-depth look at how AI is being used for coding in professional software engineering contexts, covering its benefits, challenges, the current landscape of AI coding tools, real-world use cases, ethical/regulatory considerations, and market trends shaping the future.

Table of Contents

- Benefits of Using AI

- Pitfalls and Challenges

- AI Coding Tools and Platforms

- Case Study

- Ethical, Legal, and Regulatory Considerations

- Market Trends and Future Outlook

- Conclusion

- Sources

Benefits of Using AI in Software Development

Boosted Productivity and Speed

AI coding assistants can significantly speed up routine programming tasks. Studies by GitHub found that developers solved problems up to 55% faster when using an AI pair programmer, thanks to autocompletion and code generation that expedites boilerplate coding.

In practice, this means a dev might finish in hours what previously took a day. Surveys confirm productivity as the top perceived benefit – one poll found 33% of developers cite increased coding speed/productivity as their primary motive for using AI tools. By offloading tedious tasks (e.g. writing repetitive code or syntax), AI allows engineers to focus on higher-level logic and creative problem-solving. Many developers report feeling less frustrated and more “in the flow” when mundane code is handled by AI, which can improve job satisfaction.


Improved Code Quality (Potentially)

When used properly, AI assistants can help reduce certain errors and improve code consistency. For example, they can suggest best-practice patterns or common fixes (such as edge-case checks) learned from vast codebases.

GitHub’s internal studies claimed that Copilot users saw improvements in some code-quality metrics and faster pull-request merge times. AI tools can also increase test coverage by generating unit tests or encouraging developers to write more tests – engineers say AI makes experimentation and testing easier, leading to more robust code.

However, the overall impact on code quality remains controversial. Still, by catching simple mistakes (like syntax errors or forgotten null checks) and providing instant documentation or examples, AI can act as a real-time code reviewer that augments quality control.

Cost Efficiency and Developer Throughput

Faster development means lower labor costs per feature delivered. Companies adopting generative AI in software engineering have begun to see tangible efficiency gains, saving 10–15% of total development time on average.

Every hour an AI assistant saves a developer (by writing boilerplate or avoiding bugs) is an hour that can be redirected to other tasks, effectively increasing team throughput without extra headcount. Some early adopters report substantial ROI. For example, Microsoft estimates that GitHub Copilot’s suggestions now compose up to 46% of the code in projects where it’s enabled. This productivity boost can translate into shorter release cycles and cost savings, especially on large projects.

As AI capabilities improve, experts project even larger efficiency gains – potentially 30% or more with full integration into workflows.
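To make these percentages concrete, a simple calculation helps. The team size, weekly hours, and hourly cost below are hypothetical inputs chosen for illustration, not figures from the studies cited above.

```python
# Back-of-the-envelope estimate of developer time freed by an AI assistant.
# Every input below is hypothetical; substitute your own team's numbers.


def hours_saved_per_week(developers: int, hours_per_dev: float, fraction_saved: float) -> float:
    """Total developer-hours per week freed at the given savings fraction."""
    return developers * hours_per_dev * fraction_saved


def weekly_value(hours: float, hourly_cost: float) -> float:
    """Approximate labor-cost equivalent of the freed hours."""
    return hours * hourly_cost


TEAM_SIZE = 10        # hypothetical number of developers
HOURS_PER_DEV = 40.0  # hypothetical working hours per developer per week
HOURLY_COST = 100.0   # hypothetical fully loaded cost per hour, any currency

# 10% and 15% bracket the reported average; 30% is the projected upper scenario.
for fraction in (0.10, 0.15, 0.30):
    hours = hours_saved_per_week(TEAM_SIZE, HOURS_PER_DEV, fraction)
    print(f"{fraction:.0%} saved: {hours:.0f} h/week, ~{weekly_value(hours, HOURLY_COST):,.0f} per week")
```

The calculation only counts time freed; whether that time becomes shipped work depends on how teams redeploy it.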

Innovation and Faster Prototyping

By handling menial coding tasks, AI frees developers to spend more time on design, architecture, and innovative solutions. It lowers the barrier to experimentation – developers can prototype ideas rapidly by asking an AI to generate sample code or scaffolds.

This encourages exploring multiple approaches with minimal effort. In surveys, ~25% of developers said a key reason they use AI tools is to speed up learning new technologies. For instance, an engineer can ask a chatbot to generate a snippet in an unfamiliar framework, accelerating the learning curve. AI assistants also function as on-demand mentors or “rubber ducks”, giving hints and explanations that spark creative problem-solving.

As GitHub’s CEO Thomas Dohmke put it, in the near future developers will focus on the “20% of code” that is truly unique and creative, while AI generates the 80% of generic code, not replacing developers but empowering them to innovate faster. Early evidence backs this up: developers report that AI helpers let them try more ambitious refactors or features since the grunt work is reduced, fostering a more innovative engineering culture.

Enhanced Dev Experience

Beyond raw productivity, AI can improve the developer experience in softer ways. For example, AI-based documentation tools can instantly explain a code snippet or suggest comments, making codebases more understandable for teams.

Developers using AI assistants often describe feeling more confident to tackle unfamiliar code – essentially having an “AI pair programmer” on call. In a GitHub survey, 88% of developers using Copilot said it made them more productive and 77% felt it helped them focus on more satisfying work. By reducing drudgery and providing a safety net, AI can increase morale and reduce burnout. Teams adopting AI have noted higher overall velocity and developer happiness, as mundane tasks no longer bog down the day.

Pitfalls and Challenges of AI-Assisted Coding

Incorrect or Non-Contextual Code Suggestions

AI code generators can and do make mistakes. These models lack true understanding of a project’s intent: they predict likely code based on training data, which can produce suggestions that look plausible but are wrong or inapplicable.

Developers have found that AI helpers sometimes insert subtle bugs or inefficient logic because they can’t fully grasp the specific requirements or context. As one AI expert warned, “It [AI] runs into lots of problems around whether or not the code is applicable” to the task at hand. For example, an assistant might suggest using a data structure that doesn’t fit the scale of your application or call an API incorrectly. Over-reliance without scrutiny can lead to functional issues or runtime errors. This challenge extends to AI “hallucinations”, where the tool invents nonexistent functions or uses outdated APIs – something developers must constantly guard against.

In short, AI is no replacement for human logic: every suggestion needs review for correctness and relevance, which adds overhead.
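As a concrete case of an outdated API: datetime.utcnow() is common in older Python code, so assistants readily suggest it, but it returns a naive datetime (no timezone attached) and has been deprecated since Python 3.12. The reviewed version requests a timezone-aware timestamp instead. The function names are hypothetical.

```python
from datetime import datetime, timezone


def created_at_suggested() -> datetime:
    # Typical of older code: deprecated since Python 3.12, and the result's
    # tzinfo is None, so it is easy to mix up with local times.
    return datetime.utcnow()


def created_at_reviewed() -> datetime:
    # Timezone-aware UTC timestamp; the offset travels with the value.
    return datetime.now(timezone.utc)
```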

Insecure or Vulnerable Code Generation

AI models trained on public code may suggest outdated or unsafe coding practices.

Security flaws are a known risk. Studies have shown that GitHub Copilot and similar tools can produce code with serious security vulnerabilities, such as SQL injection flaws, use of weak cryptography, or exposure of secrets. The training data often includes insecure code (e.g. hard-coded passwords, deprecated functions) that the AI can mimic if prompted similarly. For example, an AI might suggest an authentication snippet using MD5 hashing or an older encryption scheme because it appeared frequently in its training set. If a developer blindly accepts these suggestions, they could introduce exploitable vulnerabilities into the code.
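The SQL injection case is the easiest to demonstrate. This sketch uses Python’s built-in sqlite3 module with a hypothetical users table: the first function builds the query with string formatting, a pattern common in older public code, while the second passes the value as a bound parameter so the database never interprets it as SQL.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safe: the "?" placeholder makes sqlite3 bind `name` as data, not SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the injected OR clause matches every row
    print(find_user_safe(conn, payload))    # no user has that literal name
```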

Furthermore, AI lacks contextual judgment about security – it doesn’t understand which vulnerabilities are critical. There’s also the risk of “poisoned” training data: researchers warn that attackers could intentionally plant malicious code in public repos that an AI later regurgitates as a helpful snippet. In one hypothetical scenario, an AI might consistently suggest a function containing a hidden backdoor because it learned it from a poisoned example.

All these issues mean developers must apply strict security review to AI-generated code. Best practices include running security scanners on AI contributions, treating AI suggestions as untrusted by default, and educating developers to recognize insecure patterns. Until models are more security-aware, this remains a serious pitfall.

Code Quality and Maintainability Concerns

Initial research indicates that indiscriminate use of AI code generation can degrade long-term code maintainability. AI tends to favor adding new code rather than editing or deleting existing code, which can bloat codebases with redundant sections.

A comprehensive study by GitClear (an analytics firm) revealed “disconcerting trends in maintainability” since AI coding assistants gained popularity. Notably, code churn – the rate at which recently-added code is revised or reverted – is projected to double (to ~7% from ~3.3%) in the “AI era” compared to pre-AI baselines. In other words, AI is generating a lot of new code that gets rewritten shortly after, signaling wasted effort and unstable quality. The study also found a higher proportion of “copy-pasted” code versus refactored or improved code, suggesting developers may accept AI suggestions at face value even if the code isn’t ideally integrated into the project. This leads to a tangle of technical debt.

“Current AI assistants are very good at adding code, but they can cause ‘AI-induced’ technical debt”. – Bill Harding, GitClear’s founder

Code added in a hurry by AI may burden teams with more maintenance work later. There are also reports of AI encouraging less cleanup – for instance, a decrease in code being moved or refactored into better designs. All of this can make the codebase harder to understand and evolve over time.
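GitClear’s report uses its own methodology; as a simplified sketch, assume churn means the share of newly added lines that are modified or deleted within two weeks of being written. The LineRecord type and the sample history are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class LineRecord:
    """Hypothetical per-line history: when it was added and when (if ever) it next changed."""
    added_on: date
    changed_on: Optional[date] = None  # None means never modified or deleted


def churn_rate(lines: list, window: timedelta = timedelta(days=14)) -> float:
    """Share of newly added lines that were revised or deleted within `window`."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.changed_on is not None and line.changed_on - line.added_on <= window
    )
    return churned / len(lines)


start = date(2024, 1, 1)
sample = [
    LineRecord(start, start + timedelta(days=3)),   # rewritten quickly: counts as churn
    LineRecord(start, start + timedelta(days=30)),  # changed, but outside the window
    LineRecord(start),                              # never touched again
    LineRecord(start),
]
print(churn_rate(sample))
```

On this definition, going from about 3.3% to about 7% means roughly 7 of every 100 new lines are rewritten within two weeks instead of about 3.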

Developer Over-Reliance and Skill Erosion

There is a growing concern that heavy reliance on AI assistants could deskill the engineering workforce over time. If new devs come to lean on autocomplete for every other line of code, they might never learn the fundamentals deeply or develop the problem-solving attitude that comes from writing code “the hard way”.

“Over-reliance or blind trust [in AI] will lead to issues”

Indeed, AI can produce a plausible solution without the developer truly understanding it, which impedes learning. In educational settings and for junior engineers, this is a double-edged sword – the AI can teach by example, but it can also become a crutch. Some experienced programmers worry that core skills like debugging, algorithmic thinking, and optimizing code could atrophy if people only learn “to prompt the AI” instead of coding themselves.

Another manifestation of over-reliance is misplaced trust: developers might become complacent and accept suggestions without critical analysis (especially if the AI’s output usually looks correct). Since only 3% of developers highly trust the accuracy of AI tools, most are aware of this risk, yet even a “somewhat trusted” suggestion can let errors slip through.

To mitigate these risks, organizations are encouraging a “copilot, not autopilot” mentality – AI should be treated as an intern or assistant whose work must be reviewed.

Organizational Resistance and Integration Challenges

Not every company is eager to jump on the AI coding bandwagon. There can be internal resistance from developers and management alike.

Reasons include skepticism about the quality of AI-generated code, concerns about privacy/IP (addressed below), or simply cultural inertia.

Some seasoned engineers are uncomfortable with AI suggestions interfering in their coding process or worry that it might encourage bad habits. Additionally, measuring the true ROI of AI assistance can be tricky – productivity gains are real but can be hard to quantify beyond anecdotal success stories. This can make it harder for engineering leaders to justify the cost of enterprise AI tool subscriptions or the time investment to train teams on new workflows.

Integration into existing dev environments is another challenge: AI tools need to mesh with source control, code review processes, CI/CD pipelines, etc. Companies may face a learning curve figuring out the right “mix” of AI into their development lifecycle (for example, when to allow AI-generated code and how to code-review it).

There’s also the issue of tool sprawl – with many AI tools emerging, deciding which to standardize on (if any) can be daunting.

Change management and developer training are therefore critical when introducing AI into a professional workflow. Some organizations have taken a phased approach: piloting AI assistants on non-critical projects, gathering feedback, and developing guidelines (like coding standards for AI-written code) before wider rollout.

Data Privacy and Confidentiality

Many companies – especially those with proprietary codebases – are wary of tools like ChatGPT or Copilot because they involve transmitting code to third-party servers.

Even if the service provider claims not to store or train on your prompts, the perceived risk is significant. As a result, some businesses have outright banned or restricted the use of such tools in their development teams until on-premises or self-hosted solutions are available. For instance, financial and healthcare firms, bound by strict data regulations, have been cautious about any workflow that could expose source code or customer data to an AI service.

“Your organization may not want its code or development practices to be analyzed or stored by GitHub [or an AI provider], even if it is for improving AI performance” – Splunk

Privacy laws also come into play: some jurisdictions may treat code or comments as sensitive data, particularly when they contain personal information.

In any case, organizations must carefully vet how an AI tool handles their code: does it log queries? Who can access those logs? Are there content filters (to prevent secrets like passwords from being suggested)? The secure adoption of AI in coding may require waiting for on-prem solutions or using open-source AI models that run locally, especially for highly sensitive projects. This remains a key barrier for many enterprises.
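For teams that do use a hosted service, one control that stays on their side is scrubbing obvious secrets before a snippet leaves the developer’s machine. The patterns below are illustrative assumptions, not a complete rule set; dedicated secret scanners cover far more cases.

```python
import re

# Hypothetical, deliberately small rule set.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                    # shape of an AWS access key ID
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),  # PEM private key header
]
# key = "value" assignments whose key name suggests a credential
ASSIGNMENT = re.compile(r"(?i)\b(password|passwd|secret|api_key)(\s*[:=]\s*)(['\"]).*?\3")


def redact(snippet: str) -> str:
    """Mask likely secrets before a snippet is sent to an external AI service."""
    for pattern in SECRET_PATTERNS:
        snippet = pattern.sub("[REDACTED]", snippet)
    # Keep the key name and quotes so the code still reads sensibly to the model.
    return ASSIGNMENT.sub(
        lambda m: f"{m.group(1)}{m.group(2)}{m.group(3)}[REDACTED]{m.group(3)}",
        snippet,
    )
```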

Overview of Current AI Coding Tools and Platforms

The ecosystem of AI coding tools has expanded rapidly. There are dozens of AI-driven devtools on the market (and in open source), which can be grouped into a few broad categories based on their primary functionality.

AI Code Suggestion & Autocompletion Tools

These are the “AI pair programmers” that assist with writing code. They integrate with code editors or IDEs to suggest the next line or block of code, given the current context (and sometimes a natural language comment as prompt).

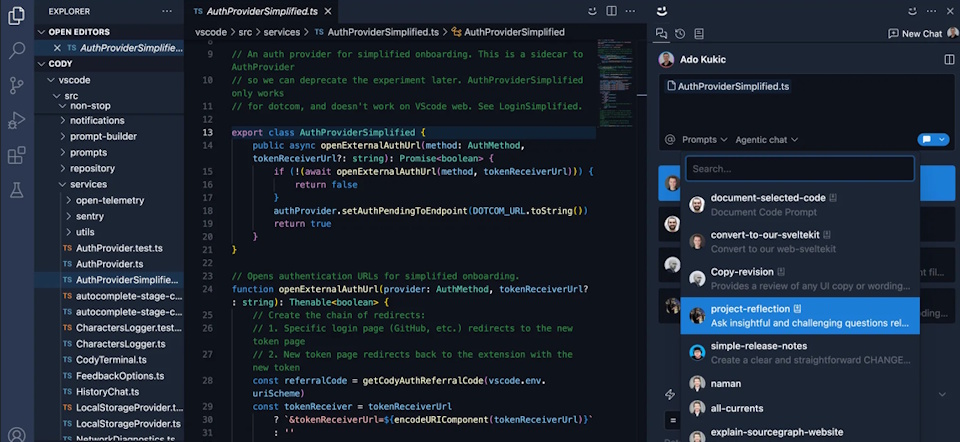

GitHub Copilot


Powered by OpenAI’s Codex (and now newer GPT models), Copilot is an extension for editors such as VS Code, Visual Studio, and JetBrains IDEs. It uses the context of the file and any comments to suggest code in real time.

Copilot supports dozens of languages and is general-purpose. It has become hugely popular – over 1M developers are paying subscribers as of 2024, and it’s used in more than 50K organizations (including 1/3 of Fortune 500 companies).

- Strengths: very advanced model (with a vast training on GitHub code), seamless IDE integration, and a growing feature set (Copilot Chat, voice-based coding, etc.).

- Weaknesses: requires cloud access (no offline), possible privacy/licensing concerns, and occasional off-base suggestions for niche contexts.

OpenAI ChatGPT (and similar AI chatbots)

While not an IDE plugin by default, ChatGPT has become a coding assistant in its own right. Developers use ChatGPT via a web interface or API to generate code snippets, get explanations, or even have entire debugging conversations. In Stack Overflow’s survey, ChatGPT was the most commonly used AI tool by developers (used by 83% of those using AI), even more than Copilot.

- Strengths: extremely powerful language model that can handle both code and natural language Q&A, great for brainstorming and getting help on algorithms or obscure errors. It’s also multi-purpose (can produce documentation, regex, SQL queries, etc.).

- Weaknesses: not directly integrated into the coding environment (though plugins exist), and responses can be hit-or-miss if the prompt is unclear. As with Copilot, data sent to the public version is shared with OpenAI (a privacy concern), though OpenAI offers private instances for enterprise customers.

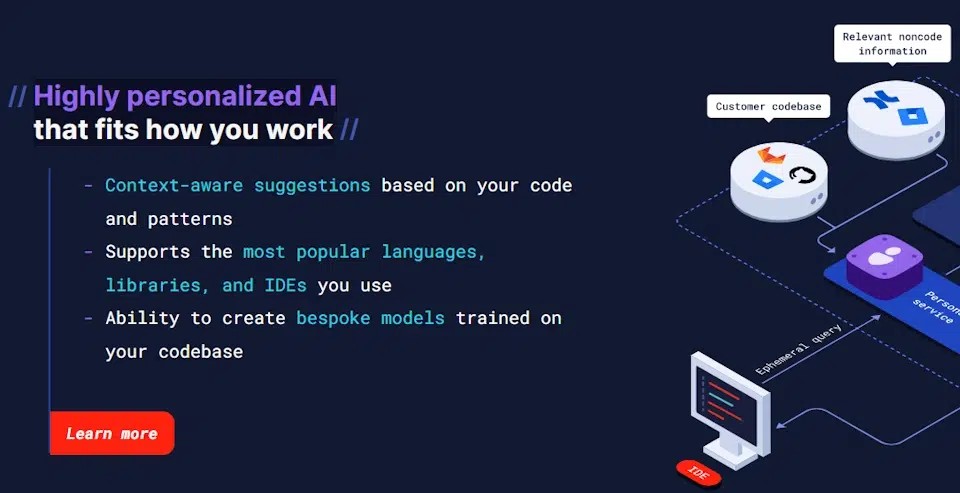

Tabnine

An AI code completion tool that pre-dates Copilot (and one of the first to use deep learning for code). Tabnine integrates with many IDEs and offers both cloud and self-hosted options, appealing to enterprises needing privacy.

It uses its own models (trained on permissively licensed open-source code to avoid IP issues) and provides suggestions as you type, similarly to Copilot. One of Tabnine’s selling points is lightweight, fast suggestions and the ability to run fully offline or on a private server. It supports many languages. According to the vendor, Tabnine has over 1 million monthly users and typically automates 30–50% of code for those users.

- Strengths: privacy/control, can be tailored with custom models per team, and supports a wide range of IDEs.

- Weaknesses: some users find its suggestions less advanced than Copilot’s (especially for complex logic), since its model might be smaller to allow on-premises deployment.

Amazon CodeWhisperer

Amazon’s answer to Copilot, integrated with AWS tooling. It’s an AI code companion that works in IDEs (VS Code, JetBrains, etc.) and is optimized for AWS APIs and workloads. For example, CodeWhisperer is very handy when writing code that interacts with AWS services (S3, Lambda, EC2, etc.), as it has been trained on Amazon’s documentation and codebases.

A standout feature of CodeWhisperer is its emphasis on security and reference tracking: it will flag any code suggestion that closely matches known open-source code and provide the source reference, so the developer can review licensing. It also has built-in scanning for hard-coded secrets or vulnerabilities in generated code. Amazon made CodeWhisperer free for individual developers, encouraging adoption.

- Strengths: strong for AWS cloud projects, helpful comments/documentation suggestions, and the reference check for licensing is a unique plus in enterprise settings.

- Weaknesses: supports fewer languages than Copilot as of now, and outside of AWS-specific scenarios its suggestions are more basic. It’s newer, so its uptake is smaller (though growing).
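CodeWhisperer’s reference tracker is proprietary, but the underlying idea of comparing a suggestion against known licensed code and flagging close matches can be sketched with Python’s standard difflib. The corpus, the 0.9 threshold, and the whitespace normalization are assumptions for illustration; a production system would index a far larger corpus with more robust matching.

```python
import difflib


def normalize(code: str) -> str:
    """Collapse runs of whitespace so indentation or spacing changes do not hide a match."""
    return " ".join(code.split())


def find_similar(suggestion: str, corpus: dict, threshold: float = 0.9) -> list:
    """Return (source, similarity) pairs for corpus entries at or above `threshold`."""
    target = normalize(suggestion)
    hits = []
    for source, snippet in corpus.items():
        ratio = difflib.SequenceMatcher(None, target, normalize(snippet)).ratio()
        if ratio >= threshold:
            hits.append((source, round(ratio, 3)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical corpus keyed by "repository (license)".
KNOWN_CODE = {
    "example/mathutils (GPL-3.0)": "def clamp(x, lo, hi):\n    return max(lo, min(x, hi))",
    "example/textutils (MIT)": "def slugify(s):\n    return s.lower().replace(' ', '-')",
}

suggestion = "def clamp(x, lo, hi):\n  return max(lo, min(x, hi))"
for source, score in find_similar(suggestion, KNOWN_CODE):
    print(f"suggestion resembles {source} (similarity {score}); review the license")
```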

Other code assistants

There are many others, including Windsurf (formerly Codeium), Replit Ghostwriter (built into Replit’s online IDE, designed for quick prototyping and learning), Sourcegraph Cody (covered later; it specializes in context-aware answers), and IBM watsonx Code Assistant.

Each has varying degrees of capability – but broadly, all these are in the same category of predicting the next chunk of code. They differ in supported IDEs, pricing (some are free or open-source, others subscription), model size, and focus areas.

AI-Assisted Code Refactoring and Optimization

Another emerging category is tools that help improve or transform existing code. Instead of just writing new code, these AI tools can analyze code and suggest refactorings, optimizations, or even translate code between languages/frameworks:

Automated Refactor Suggestions

Modern IDEs have long had refactor suggestions (e.g. “extract method”, “rename variable”), but AI takes this further.

For instance, Amazon CodeGuru Reviewer uses ML to propose more complex changes, like refactoring a chunk of code to be more efficient or idiomatic. It might detect code that can be simplified (e.g. “this loop can be replaced with a stream operation”) or suggest using a different algorithm that performs better.

These tools often integrate with code review: they run analysis on pull requests and comment with AI-generated improvement suggestions. While not yet as mainstream as code generation, such refactoring assistants are growing. Developers can also leverage general AI (ChatGPT, etc.) by prompting it with “refactor this function for readability”.
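The quoted suggestion refers to Java streams; the Python equivalent replaces a loop with a manual accumulator by sum() over a generator expression. The function names are hypothetical. Whatever the language, a refactor must return the same result as the original, which is what a reviewer, human or automated, should check.

```python
def active_total_before(orders: list) -> float:
    """Original: imperative loop with a manual accumulator."""
    total = 0.0
    for order in orders:
        if order["status"] == "active":
            total += order["amount"]
    return total


def active_total_after(orders: list) -> float:
    """Refactored: same filter-and-sum; the 0.0 start keeps a float result for empty input."""
    return sum((order["amount"] for order in orders if order["status"] == "active"), 0.0)


orders = [
    {"status": "active", "amount": 10.0},
    {"status": "closed", "amount": 5.0},
    {"status": "active", "amount": 2.5},
]
assert active_total_before(orders) == active_total_after(orders)
```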

Code Translation and Modernization

AI can help port code from one programming language to another, or upgrade legacy code to modern practices.

For example, an AI model can translate a Java class to C#, or convert Python 2 code to Python 3, taking care of syntax differences. Tools in this space include experimental features from AWS (like using AI to migrate .NET Framework code to .NET Core) and community projects using GPT to modernize COBOL or old Java code.

Although results still need manual verification, this can speed up large-scale migrations. Even Stack Overflow’s OverflowAI initiative hints at helping developers adapt code across tech stacks.
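A small before-and-after illustrates both the mechanical and the subtle parts of a Python 2 to Python 3 port; the function is hypothetical. The division change is the kind of semantic difference that makes manual verification necessary.

```python
# Python 2 original (kept as a comment because it is a syntax error in Python 3):
#
#     def average_count(counts):
#         for key, value in counts.iteritems():
#             print "%s: %d" % (key, value)
#         return sum(counts.values()) / len(counts)


def average_count(counts: dict) -> int:
    """Python 3 port of the function above."""
    for key, value in counts.items():    # iteritems() no longer exists; items() returns a view
        print("%s: %d" % (key, value))   # print is now a function, not a statement
    # With int operands, Python 2's "/" floors but Python 3's "/" returns a float.
    # "//" preserves the original behaviour; a purely syntactic port would silently change it.
    return sum(counts.values()) // len(counts)
```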

Performance Tuning

Some AI tools specialize in analyzing code for performance issues.

Amazon CodeGuru Profiler uses ML to find hotspots and suggest optimizations (not generative AI per se, but an AI-driven analysis). We might soon see generative models that not only identify a slow piece of code but also rewrite it in a more efficient way. Imagine an AI suggesting a more efficient data structure or parallelizing a computation – this is on the horizon of AI-assisted development.
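The kind of rewrite described above might look like the following hand-written sketch (not output from CodeGuru or any other tool): both functions find duplicated items, but the first compares every pair while the second makes a single pass using sets.

```python
def duplicates_quadratic(items: list) -> set:
    """O(n^2): compares every pair of positions."""
    dupes = set()
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                dupes.add(items[i])
    return dupes


def duplicates_linear(items: list) -> set:
    """O(n) on average: remember what has been seen in a single pass."""
    seen = set()
    dupes = set()
    for item in items:
        if item in seen:
            dupes.add(item)
        else:
            seen.add(item)
    return dupes
```

Because both versions return the same set for any input, an automated rewrite like this can be checked by running the old and new functions side by side on the existing tests.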

It’s worth noting that general AI coding assistants (Copilot, ChatGPT) can also be used for refactoring tasks when prompted appropriately.

AI in Testing and Bug Detection

Quality assurance (QA) is a critical part of software development, and AI is making inroads here as well.

Test Case Generation

Writing unit tests and integration tests is time-consuming. AI can help by generating test cases given a function’s code or specification.

For example, Diffblue Cover is an AI tool (focused on Java) that automatically writes JUnit tests for your code: it examines the code paths and creates tests to cover them. Similarly, Qodo has an “AI Testgen” that reads a JavaScript/Python function and suggests possible tests (and even property-based tests) to validate its behavior.

These tools aim to improve coverage and catch edge cases that developers might overlook. They can save QA teams a lot of effort in writing boilerplate test code (though human review is needed to ensure the tests make sense).
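To make this concrete, here is the sort of test suite such a tool might propose for a small function, written with Python’s built-in unittest. The function and test names are hypothetical, and the randomized check is a hand-rolled stand-in for a property-based testing library.

```python
import random
import unittest


def normalize_score(score: float, max_score: float) -> float:
    """Map a raw score onto [0, 1], clamping out-of-range values."""
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    return min(max(score / max_score, 0.0), 1.0)


class TestNormalizeScore(unittest.TestCase):
    def test_typical_value(self):
        self.assertAlmostEqual(normalize_score(45, 90), 0.5)

    def test_clamps_above_and_below(self):
        self.assertEqual(normalize_score(120, 100), 1.0)
        self.assertEqual(normalize_score(-5, 100), 0.0)

    def test_rejects_non_positive_max(self):
        with self.assertRaises(ValueError):
            normalize_score(10, 0)

    def test_property_result_always_in_unit_interval(self):
        rng = random.Random(0)  # fixed seed keeps the test deterministic
        for _ in range(1_000):
            score = rng.uniform(-1e6, 1e6)
            max_score = rng.uniform(1e-6, 1e6)
            self.assertTrue(0.0 <= normalize_score(score, max_score) <= 1.0)
```

Saved as a module, the suite runs with python -m unittest. Each generated test still needs a human check that it encodes the intended behavior rather than whatever the code currently does.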

Automatic Bug Fixing

Experimental AI systems can not only find bugs but attempt to fix them.

For instance, researchers at Meta created SapFix, an AI that could generate fixes for certain bugs and propose them to human engineers. More accessibly, a developer can paste an error message or a buggy code snippet into ChatGPT and often get a suggested fix or at least an explanation.

There are also IDE plugins (some leveraging GPT) that act like an AI rubber-duck debugger – you highlight a piece of code and ask “why might this be failing?”, and the AI will analyze it. While these are not foolproof, they can significantly speed up debugging by pointing developers in the right direction or identifying problematic code.
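A classic bug that assistants usually recognize and explain when shown the snippet is Python’s mutable default argument. The function names are hypothetical; the fix is the standard idiom.

```python
from typing import Optional


def add_tag_buggy(tag: str, tags: list = []) -> list:
    # Bug: the default list is created once, when the function is defined,
    # so every call that omits `tags` appends to the same shared list.
    tags.append(tag)
    return tags


def add_tag_fixed(tag: str, tags: Optional[list] = None) -> list:
    # Fix: use None as a sentinel and create a fresh list on each call.
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


first, second = add_tag_buggy("a"), add_tag_buggy("b")
print(first is second, first)  # the two results are the same list object
print(add_tag_fixed("a"), add_tag_fixed("b"))
```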

Static Analysis with AI

Traditional static analysis (linting, vulnerability scanning) is being augmented by machine learning.

Snyk Code, for example, uses an ML-based engine (from the DeepCode acquisition) to detect security issues and code smells in code. It’s not generative (doesn’t write code), but it learns from millions of code examples what patterns are likely bugs. The advantage of AI here is fewer false positives and the ability to catch more complex issues. We can expect future static analysis tools to integrate generative capabilities – e.g., not only flag a potential issue but also suggest a fix (in natural language or code patch form).
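ML-based engines learn their rules from data, but the mechanics of a check are easiest to see in a hand-written rule. This sketch uses Python’s standard ast module to flag calls to hashlib.md5 and hashlib.sha1, the weak-hash pattern mentioned earlier; the rule set and message format are assumptions for illustration.

```python
import ast

WEAK_HASHES = {"md5", "sha1"}


def find_weak_hashes(source: str) -> list:
    """Return (line number, message) for each hashlib.md5/sha1 call in `source`."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and isinstance(node.func.value, ast.Name)
            and node.func.value.id == "hashlib"
            and node.func.attr in WEAK_HASHES
        ):
            findings.append((node.lineno, f"weak hash hashlib.{node.func.attr}()"))
    return sorted(findings)


sample = """
import hashlib
digest = hashlib.md5(password.encode()).hexdigest()
token = hashlib.sha256(seed).hexdigest()
"""
print(find_weak_hashes(sample))
```

A rule this literal misses aliased imports such as from hashlib import md5, which is the kind of gap learned models aim to close.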

Documentation and Code Explanation Tools

Inline Documentation & Comments

AI can generate docstrings, code comments, and even entire README sections by analyzing code. For example, a developer can ask ChatGPT, “Explain what this function does”, and get a decent summary that can be refined into documentation.

Tools like GitHub Copilot have an experimental feature where you write a comment such as “// function to sort list of users by name” and Copilot generates the function code; conversely, you can select a chunk of code and ask Copilot to create a comment or docstring for it. This helps maintain good documentation practices with minimal effort. It’s particularly useful for complex logic that may not be immediately clear; the AI can draft an explanation which the developer can then tweak for accuracy.
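Rendered in Python (where comments start with # rather than //), the prompt comment and a plausible completion with a generated docstring might look like this. The User type and its fields are assumptions, and a real assistant’s output will vary.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    email: str


# function to sort list of users by name
def sort_users_by_name(users: list) -> list:
    """Return a new list of users ordered alphabetically by name.

    Comparison is case-insensitive, so "alice" and "Alice" sort together.
    The input list is not modified.
    """
    return sorted(users, key=lambda user: user.name.casefold())
```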

Knowledge Base Q&A

Large organizations have tons of internal code and APIs. AI-powered search tools like Sourcegraph Cody use embeddings (vectorized representations of code) to let developers query their entire codebase in natural language. For instance, “Where in our repo do we parse the user’s timezone preference?” might yield an answer pointing to a specific function, with the relevant code snippet.

Cody can also explain code by combining repository context with a language model, acting like an always-updated technical assistant that knows your codebase. This is tremendously helpful for onboarding new developers or for engineers navigating a huge legacy project. It’s like having Stack Overflow, but specifically for your company’s code. Early adopters report big time savings in finding answers that would otherwise require digging through documentation or asking around.
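Production tools such as Cody use embeddings produced by a trained model. The toy sketch below substitutes simple word-count vectors so it runs with the standard library alone, but the retrieval step has the same shape: turn the question and each code chunk into vectors, then rank chunks by cosine similarity. The file paths and snippets are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words stand-in for a learned embedding: lowercase word counts.

    camelCase is split before lowercasing; snake_case splits on the underscores.
    """
    text = re.sub(r"([a-z])([A-Z])", r"\1 \2", text)
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(question: str, chunks: dict, top_k: int = 1) -> list:
    """Return the paths of the chunks most similar to the question."""
    q = vectorize(question)
    ranked = sorted(chunks, key=lambda path: cosine(q, vectorize(chunks[path])), reverse=True)
    return ranked[:top_k]


CHUNKS = {
    "settings/prefs.py": "def parse_timezone_preference(user): return user.prefs.get('timezone')",
    "billing/invoice.py": "def total_invoice(items): return sum(i.price for i in items)",
}
print(search("Where do we parse the user's timezone preference?", CHUNKS))
```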

User Documentation Generation

Some teams use AI to generate end-user documentation, API reference docs, or even release notes from code changes. Given a code diff or a set of API definitions, an AI can draft human-readable descriptions. This can ensure documentation stays in sync with the code. For example, OpenAI’s models have been used to convert OpenAPI specifications into coherent API documentation paragraphs.

Similarly, if an app’s behavior changes, an AI might help compose the “What’s New” notes by analyzing commit messages and code diffs. While these still need editorial oversight, they significantly reduce the grunt work of writing documentation from scratch.

Other Emerging AI Developer Tools

DevOps and Configuration AI

Tools like Harness are introducing AI copilots for DevOps – for example, generating CI/CD pipeline configurations, Terraform scripts, or Kubernetes YAML by describing the infrastructure in plain English. This helps bridge gaps for developers who may not be experts in these declarative configs. AI can also analyze build logs or monitoring alerts and suggest fixes (like “Your container is crashing due to X, try increasing memory limit”).

AI Coding Agents

A forward-looking area is autonomous coding “agents” that can handle higher-level tasks.

Early experiments (e.g. by startups like MagicDev or Cognition Labs) involve an AI agent that can take a feature request (like “implement a CLI for X with these commands”) and then generate multiple files, coordinate tests, and iterate – essentially aiming to be a junior developer that can make small software products with minimal guidance. These are in very early stages and often require a human in the loop to validate each step. However, the concept hints at a future where AI could handle more of the software development lifecycle (from planning to coding to testing) in a loop, not just single-step code suggestions.
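The generate, test, iterate loop these agents run can be sketched in a few lines. Here the “model” is a hypothetical stub that returns canned candidates in order; a real agent would call a language model and pass the previous failure back in its prompt. The human-in-the-loop step is reduced to returning the passing code for review.

```python
from typing import Callable, Optional


def run_tests(candidate: str) -> Optional[str]:
    """Run a candidate's source and return a failure message, or None if it passes."""
    namespace: dict = {}
    try:
        exec(candidate, namespace)  # fine for a sketch; a real agent would sandbox this
        solve = namespace["solve"]
        if solve([3, 1, 2]) != [1, 2, 3]:
            return "solve([3, 1, 2]) returned the wrong order"
        if solve([]) != []:
            return "solve([]) should return []"
    except Exception as exc:  # crashes also become feedback for the next attempt
        return f"{type(exc).__name__}: {exc}"
    return None


def agent_loop(propose: Callable[[Optional[str]], str], max_steps: int = 3) -> Optional[str]:
    """Request candidates until one passes or the step budget is exhausted."""
    feedback: Optional[str] = None
    for step in range(1, max_steps + 1):
        candidate = propose(feedback)  # a real agent would include `feedback` in its prompt
        feedback = run_tests(candidate)
        print(f"step {step}: {'pass' if feedback is None else feedback}")
        if feedback is None:
            return candidate  # hand the passing code to a human reviewer
    return None


# Hypothetical stand-in for the model: the first attempt is wrong, the second is right.
attempts = iter([
    "def solve(xs):\n    return sorted(xs, reverse=True)",
    "def solve(xs):\n    return sorted(xs)",
])
result = agent_loop(lambda feedback: next(attempts))
```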

Low-Code/No-Code with AI

While not the focus for professional developers, it’s worth noting that many low-code platforms are embedding generative AI to allow natural language specifications.

For example, Microsoft’s Power Apps and Google’s AppSheet have begun integrating GPT-based assistants so a user can say “Create an app to track inventory with fields X, Y, Z” and get a starting point app. Professional devs might interface with these when quick internal tools are needed, or at least need to ensure governance around them.

Case Study – Dev Workflow Transformation

HPE (Hewlett Packard Enterprise) reported on using an AI assistant to automate code reviews for one of their large software products.

The AI was fine-tuned on HPE’s coding standards and past code review comments. When a new commit is submitted, the AI reviews it and comments on things like missing documentation, potential null-pointer issues, and divergence from style guidelines. Developers then address these before the human review phase, making human reviews faster and more focused on design rather than syntax or trivial issues.

HPE noted a reduction in review turnaround time and higher consistency in code quality for that project.

Ethical, Legal, and Regulatory Considerations

Intellectual Property (IP) and Licensing

Perhaps the thorniest issue is: who owns the code generated by AI and is it infringing on someone else’s IP?

AI models like OpenAI’s Codex were trained on billions of lines of source code, much of it open-source with various licenses. This has led to concerns that AI assistants might reproduce copyrighted code without proper attribution or license compliance. In fact, a class-action lawsuit (Doe v. GitHub) was filed alleging that GitHub Copilot violated open-source licenses by regurgitating licensed code snippets without preserving license notices. As of early 2024, a judge dismissed some claims but allowed others to proceed, so the legal questions are still unresolved.

Vendors are responding: in late 2023 Microsoft announced a Copilot Copyright Commitment, essentially an indemnification pledge. Microsoft committed that if a customer is sued for copyright infringement due to using Copilot’s output, it will defend them and pay any judgments, as long as the customer used the AI within prescribed guidelines. This was explicitly meant to address customers’ IP concerns about generative AI. It doesn’t erase the risk of litigation, but it shifts that risk to the provider in those cases.

Other companies like Tabnine highlight that their models are trained exclusively on permissively licensed code and data the user provides, to avoid IP entanglements.

The conversation around ethical standards is shifting toward transparency: some propose that AI tools should indicate when they reproduce exact text from their training sources. Legal concerns remain, especially for longer pieces of AI-generated code that might resemble protected work. For now, using AI-generated code in closed-source projects is considered relatively safe, but sharing that code publicly could require additional licensing review.

Bias and Fairness

While bias is often discussed in the context of AI decision-making (like biased hiring algorithms), it can also creep into coding tools.

Bias in training data means the AI might over-represent certain languages, frameworks, or styles. For example, if 90% of the code an AI saw for web apps was written with a particular library, it may always suggest that library (even if there are other viable options). This could stifle diversity of tools or propagate outdated practices.

More seriously, if the training data included code with biased or offensive identifiers/comments, the AI might even suggest culturally insensitive names or insecure patterns that reflect historical biases (e.g., using “master/slave” terminology in code, which many projects now avoid – an AI might unknowingly suggest it because it was common in older code). Also, AI might be biased towards English language comments and struggle with code/comments in other spoken languages, which could disadvantage non-English-speaking developers.

Another angle is bias in who benefits: junior developers and those without strong mentorship may lean heavily on AI and potentially not develop skills as quickly (as discussed earlier), whereas senior devs might use AI more judiciously. This could widen skill gaps if not managed. There’s also the concern of reinforcing dominant coding styles: if an AI always suggests code in a certain pattern, alternative creative solutions might be overlooked, leading to a homogenization of coding style that isn’t always for the better.

Mitigating bias involves curating training data and possibly fine-tuning models on diverse and up-to-date code. Some research is looking at “debiasing” code models – for instance, ensuring the AI is aware of security best practices (so it doesn’t favor the insecure examples it learned). Users of AI coding tools should remain critical: just because the AI suggests a solution, it isn’t necessarily the optimal or modern one – it could be reflecting an outdated approach that was common in 2018 but not today.

Responsibility and Accountability

If an AI writes a chunk of code that later causes a major bug or security breach, who is accountable?

Ethically, the human developer who accepted the code is still on the hook – you can’t “blame the AI” in a formal sense (and certainly not in a court or customer SLA). However, this dynamic might complicate team processes. For instance, code review might need to specifically call out AI-generated code for extra scrutiny. Some organizations might require developers to label whether code was AI-assisted.
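
One lightweight way to implement such labeling is a git-style commit trailer. The sketch below assumes a hypothetical team convention, `AI-Assisted: yes`, and a hypothetical `needs_extra_review` check that a CI job could call to route those commits to additional scrutiny.

```python
from __future__ import annotations

# Hypothetical convention: commits containing AI-generated code carry a
# git-style trailer such as "AI-Assisted: yes" in their final paragraph.
TRAILER_KEY = "ai-assisted"


def parse_trailers(message: str) -> dict[str, str]:
    """Parse 'Key: value' lines from the last paragraph of a commit message."""
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:  # a subject line alone has no trailer block
        return {}
    trailers = {}
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        if not sep or " " in key.strip():
            return {}  # last paragraph is ordinary prose, not trailers
        trailers[key.strip().lower()] = value.strip()
    return trailers


def needs_extra_review(message: str) -> bool:
    """True if the commit declares AI assistance and should get extra scrutiny."""
    value = parse_trailers(message).get(TRAILER_KEY, "")
    return value.lower() in {"yes", "true"}


msg = """Add retry logic to payment client

Wraps outbound calls with exponential backoff.

AI-Assisted: yes
Reviewed-by: Jane Doe <jane@example.com>"""
print(needs_extra_review(msg))  # True
```

Git can also parse trailers natively with `git interpret-trailers --parse`; the hand-written parser here simply keeps the example self-contained.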

There’s also an emerging idea of AI liability: if an AI tool consistently suggests bad code that causes damage, could the tool provider be liable? This hasn’t been tested much legally, and providers’ terms of service typically disclaim liability and emphasize that the user must oversee the output. In the future, regulators may impose requirements on AI use in safety-critical software, such as autonomous vehicle systems.

Compliance and Standards

In regulated industries, there may be standards evolving around AI usage. For example, ISO or IEEE might create standards for software developed with AI assistance, or documentation requirements to log when AI was used (for audit purposes).

The EU AI Act, one of the first comprehensive AI regulations (it entered into force in August 2024, with obligations phasing in over the following years), might place code generation AI in certain risk categories if it is used in products that affect people, though most coding tools likely fall under low-risk categories. However, when AI generates code for medical devices or aerospace systems, it indirectly becomes a high-stakes use. Companies might voluntarily impose stricter QA on AI-involved code in such domains.

Privacy and Data Protection

Beyond just the code, consider that AI models might inadvertently expose data seen during training or prompts. There was controversy when users found Copilot sometimes produced chunks of verbatim code from public repos – imagine if any of those had personal data or API keys (which sometimes end up mistakenly committed).

One analysis (GitGuardian) found that in a sample of about 20,000 GitHub repositories where Copilot was active, 6.4% had at least one leaked secret, compared with 4.6% of repositories overall, roughly 1.4 times the baseline rate. This suggests that AI use may correlate with more accidental inclusion of secrets, possibly because developers trust a suggestion containing what looks like a dummy credential but is actually a pattern reproduced from training data.

The statistic shows how AI can amplify mistakes when it is used carelessly. On the privacy side, if developers paste proprietary code into a public AI service (such as the free version of ChatGPT), that code might be used to train future models. OpenAI states that it does not use API data for training by default, whereas consumer ChatGPT conversations may be used unless the user opts out. Such concerns led Samsung to ban engineers from using ChatGPT after sensitive code was reportedly leaked through it.
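
Scanning for secrets before code is committed is a common safeguard against this kind of leakage. The sketch below is deliberately minimal: it checks each line against two illustrative regular expressions, one for the well-known `AKIA` prefix of AWS access key IDs and one for quoted values assigned to credential-like names. The patterns and the `scan_for_secrets` helper are assumptions for illustration; dedicated scanners use far larger rule sets and entropy checks.

```python
from __future__ import annotations

import re

# Two illustrative detectors; real scanners (e.g. GitGuardian, gitleaks)
# ship hundreds of tuned rules plus entropy checks.
PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted value of 8+ chars assigned to a credential-like name,
    # e.g. db_password = "..." or api_key: '...'.
    "hardcoded_credential": re.compile(
        r"""(?i)(?:password|passwd|secret|api_key|token)\w*\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, detector_name) for every line that matches a detector."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


# A hypothetical AI suggestion containing two hard-coded credentials.
suggested = '''client = boto3.client("s3")
aws_key = "AKIAABCDEFGHIJKLMNOP"
db_password = "hunter2hunter2"
'''
print(scan_for_secrets(suggested))
```

Run against the sample, it flags lines 2 and 3. Patterns this simple will also produce false positives (for example, on test fixtures), so in practice they serve as a prompt for human review rather than an automatic block.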

The solution many enterprises are pursuing is deploying private instances of AI models.

For example, Microsoft’s Azure OpenAI Service hosts OpenAI models in a setup where customer data isn’t used to train the models and stays within Azure’s compliance boundary. Some firms go further and train or fine-tune their own models on internal code (for example, fine-tuning Code Llama with Hugging Face Transformers) so that they have full control and share no data externally. This trend may accelerate: we might see each large company run its own “in-house AI developer assistant,” tuned to its code and rules and living behind its firewall. That approach addresses privacy but is resource-intensive and requires expertise.

Market Trends and Future Outlook

“I’ve heard people say this is going to replace a developer, and that anybody can be a developer. I don’t see that yet. What I see is that an experienced dev is going to become an optimized developer because these tools and capabilities give them access to things much faster than they’ve ever had before”. – Merim Becirovic, managing director at Accenture

Surging Investment and Startup Activity

The past few years have seen massive investment in AI developer tools.

Venture capital is pouring into startups that promise to reinvent coding with AI. For instance, in 2024 Magic.dev raised $320 million with the ambitious goal of building a “superhuman software engineer” AI. Another startup, Cognition, promoted its release of Devin as “the first AI software engineer.” While some of these claims are marketing hyperbole (as industry analysts note, these AI “engineers” are essentially advanced assistants, not fully autonomous coders), the money flowing in is real.

Menlo Ventures reported that enterprise spending on generative AI applications (across domains) jumped almost 8× from 2023 to 2024 (from ~$600M to $4.6B).

Within that, code-focused tools are a leading category. GitHub Copilot itself reached a $300 million annual revenue run-rate within two years of launch, demonstrating a strong willingness to pay for AI developer productivity. Big tech companies are not sitting idle either: Microsoft’s multibillion-dollar partnership with OpenAI is partly aimed at securing dominance in this space; Amazon offers CodeWhisperer (since folded into Amazon Q Developer) and is investing in code-centric models; and Google has integrated its code models (the PaLM 2-based Codey family, and later Gemini) into its developer tooling, now branded Gemini Code Assist.

The trend points to fierce competition and rapid improvements. We’re likely to see consolidation too: smaller players with distinctive technology may be acquired by larger DevOps companies wanting to add AI capabilities, much as Snyk acquired DeepCode.

Enterprise Adoption and Spending

As noted, many enterprises are testing the waters or already diving in.

Surveys such as McKinsey’s global survey on AI point in the same direction. An estimate on the order of 30% of organizations having at least one AI use case in software development in production (with many expecting to increase investment over the next few years) is illustrative rather than a sourced figure, but it aligns with qualitative trends. Menlo Ventures’ analysis showed that companies identified 10 different use cases for generative AI in their business on average, and code copilots were the top use case, with 51% adoption.

Not only tech firms, but banks, retailers, and manufacturers are adopting AI to help their internal software teams. For example, Bank of America created an internal AI assistant to help developers with COBOL and Java code maintenance on legacy systems.

One notable trend is that many companies are starting with AI pair programming for internal tools and infrastructure code, where the risk of mistakes is lower, before moving to customer-facing product code.

Additionally, enterprises place heavy emphasis on governance: expect growing demand for features such as audit logs of AI suggestions and the ability to disable certain capabilities (for example, not allowing the AI to generate code that calls external APIs unless approved).
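
Such governance controls can be sketched as a gate that sits between the assistant and the editor. The Python below assumes a hypothetical policy in which suggested code may only reference an allowlist of hosts, and it writes a JSON audit record for every decision. The function name, record fields, and in-memory log are illustrative, not any vendor’s API.

```python
from __future__ import annotations

import json
import re
from datetime import datetime, timezone
from urllib.parse import urlparse

# Rough URL matcher used as a proxy for "this code calls an external API".
URL_RE = re.compile(r"https?://[^\s'\"<>)]+")


def review_suggestion(code: str, allowed_hosts: set[str], user: str,
                      audit_log: list[str]) -> bool:
    """Allow the suggestion only if every referenced host is approved.

    Every decision, accepted or not, is appended to audit_log as JSON.
    """
    hosts = sorted({urlparse(url).hostname or "" for url in URL_RE.findall(code)})
    blocked = [host for host in hosts if host not in allowed_hosts]
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "external_hosts": hosts,
        "blocked_hosts": blocked,
        "accepted": not blocked,
    }
    audit_log.append(json.dumps(record))  # stand-in for an append-only store
    return not blocked


log: list[str] = []
allowed = {"api.internal.example.com"}
ok = review_suggestion(
    'requests.get("https://api.internal.example.com/v1/users")', allowed, "dev1", log)
bad = review_suggestion(
    'requests.post("https://paste.example.net/upload", data=src)', allowed, "dev1", log)
print(ok, bad, len(log))  # True False 2
```

A regex over URLs is only a coarse proxy for “calls an external API”: hosts assembled from variables or configuration would go undetected, so a real control would pair a check like this with network-level policy.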

Improving AI Capabilities (Technical Outlook)

On the technical front, AI models for code are rapidly improving.

OpenAI’s GPT-4 was a leap in handling more complex instructions compared with GPT-3.5. Google’s Gemini models, first released in late 2023, are a strong new competitor in code generation. Meta released Code Llama (an open-source, code-specialized LLM), which allows the community and enterprises to fine-tune and deploy their own coding AIs without depending on OpenAI.

We can anticipate that models will get better at understanding larger contexts – e.g., instead of being limited to a few hundred lines, an AI might ingest an entire project structure (thousands or millions of lines) and reason about it. This would make it far more useful for architectural suggestions, multi-file changes, and understanding the implications of code edits across a codebase.
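
To see why context size matters, consider how a tool must choose what to send when a project exceeds the model’s window. The sketch below assumes a crude estimate of about four characters per token (real tools use the model’s own tokenizer) and greedily packs whole files, smallest first, into a hypothetical token budget.

```python
from __future__ import annotations


def estimate_tokens(text: str) -> int:
    """Crude heuristic of ~4 characters per token (not a real tokenizer)."""
    return max(1, len(text) // 4)


def pack_context(files: dict[str, str], budget: int) -> tuple[list[str], list[str]]:
    """Greedily include whole files, smallest first, until the budget is spent."""
    included: list[str] = []
    skipped: list[str] = []
    used = 0
    for path, text in sorted(files.items(), key=lambda item: estimate_tokens(item[1])):
        cost = estimate_tokens(text)
        if used + cost <= budget:
            included.append(path)
            used += cost
        else:
            skipped.append(path)
    return included, skipped


# Hypothetical project: contents are placeholders of ~100, 500 and 2000 tokens.
files = {"models.py": "z" * 8000, "main.py": "x" * 400, "utils.py": "y" * 2000}
print(pack_context(files, budget=1000))  # (['main.py', 'utils.py'], ['models.py'])
```

Smallest-first maximizes how many files fit but ignores relevance; assistants more commonly try to rank files by relevance to the task. Either way, a larger context window reduces how often anything has to be left out.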

Another anticipated advancement is multimodal AI for coding: models that accept not just text but other kinds of input, such as an image of a GUI design, and generate code from it (some early demos already show “draw a wireframe on a napkin, and the AI generates the HTML/CSS/JS for it”). Another example is taking a database schema and generating API code from it. This could streamline many aspects of development; think of designing in Figma and getting production-ready React code, which would drastically shorten front-end development time for certain UIs.

Integration into Dev Toolchains

In the near future, AI features will be standard in IDEs and developer platforms.

Microsoft has already announced plans for deeper integration – e.g., Visual Studio’s IntelliCode is evolving into an AI code companion that can understand your entire solution. GitHub is working on Copilot CLI for command-line automation and Copilot for Docs to query documentation.

We will likely see version control systems with AI (imagine your git diff is accompanied by an AI analysis: “These changes introduce a new dependency; here’s a summary of functions added…”). Continuous integration could have AI steps (“AI code reviewer” that blocks a merge if certain anti-patterns are detected). Even project management tools might use AI to estimate tasks by analyzing code complexity.
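
The diff summary imagined above can be approximated deterministically. This sketch scans the added lines of a unified diff for new Python function definitions and imports. `summarize_diff` is a hypothetical helper; an AI reviewer would layer natural-language analysis on top of facts like these.

```python
from __future__ import annotations

import re

# Added lines ("+...") that define a function or import a module.
DEF_RE = re.compile(r"^\+\s*(?:async\s+)?def\s+(\w+)\s*\(")
IMPORT_RE = re.compile(r"^\+\s*(?:from\s+([\w.]+)\s+import|import\s+([\w.]+))")


def summarize_diff(diff: str) -> dict[str, list[str]]:
    """List functions added and top-level modules imported by a unified diff."""
    functions: list[str] = []
    modules: set[str] = set()
    for line in diff.splitlines():
        if line.startswith("+++"):  # file header, not content
            continue
        if m := DEF_RE.match(line):
            functions.append(m.group(1))
        elif m := IMPORT_RE.match(line):
            modules.add((m.group(1) or m.group(2)).split(".")[0])
    return {"functions_added": functions, "modules_imported": sorted(modules)}


diff = """--- a/app.py
+++ b/app.py
@@ -1,3 +1,8 @@
 import os
+import requests
+from tenacity import retry
+
+@retry
+def fetch_user(user_id):
+    return requests.get(f"https://api.example.com/users/{user_id}").json()
"""
print(summarize_diff(diff))
```

An imported module is only a hint of a new dependency (it may already be installed or belong to the standard library); a CI step could compare the list against the project’s lockfile before deciding whether to block a merge.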

Developer Skillset Evolution

The definition of a software developer may shift.

Prompting and AI orchestration could become key skills – knowing how to effectively instruct an AI to get the desired code, how to verify and improve AI output, etc. Some are calling this “Software Developer 2.0” where the developer’s job is as much about guiding AI as coding manually.
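
Verifying AI output can be as simple as differential testing: run the suggestion and a trusted reference on the same inputs and compare the results. In this sketch the AI-suggested `ai_is_leap` is hypothetical and deliberately buggy, while `calendar.isleap` from Python’s standard library serves as the reference.

```python
import calendar


def ai_is_leap(year: int) -> bool:
    """Hypothetical AI suggestion: forgets the 'divisible by 400' rule."""
    return year % 4 == 0 and year % 100 != 0


def find_mismatches(candidate, reference, inputs):
    """Differential test: return every input where the two functions disagree."""
    return [x for x in inputs if candidate(x) != reference(x)]


mismatches = find_mismatches(ai_is_leap, calendar.isleap, range(1, 2501))
print(mismatches)  # [400, 800, 1200, 1600, 2000, 2400]
```

The check surfaces exactly the century years divisible by 400, the rule the suggestion missed. When no reference implementation exists, property-based tests can play the same role.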

Educational curricula are starting to include AI pair-programming best practices. On the flip side, interview processes might change: if AI can help solve programming puzzles, companies might put less emphasis on algorithm trivia and more on design and understanding (or they’ll change interview questions to be AI-proof, which could be interesting). There might also be new roles like AI Code Coach – someone in a large team who is an expert in how the AI behaves and helps tune it or set team-specific configurations.

Open Source Impact

Open source software could be affected too: if AI generates a lot of code, will we see more code contributed by bots?

Already, there have been instances of AI-generated pull requests on GitHub for trivial issues, with mixed reception. Open source maintainers might start using AI-driven bots to handle simple issues, such as adding a required config or updating dependencies. Open source licenses themselves may also adapt: we might see new licenses that address AI training (some projects already choose licenses that restrict use of their code as training data).

Economic and Job Market Trends

In the broader economic sense, if AI makes developers significantly more productive, we might see a deflationary effect on the cost of software development.

This could spur more startups (since MVPs cost less to build) and more software projects in non-tech industries (since they can do more with the same budget). For developers, it means the job is likely to change rather than vanish: history with tools (IDEs, higher-level languages, etc.) shows that better tools increase demand for software and expand what we build. There will be a period of adjustment in which developers who master AI tools will have an edge.

In fact, job postings are already listing “Experience with AI coding tools (Copilot, etc.)” as a desirable skill. Companies want to ensure new hires are open to using these productivity boosters.

Future Vision – AI-Augmented Software Lifecycle

If we look a few years ahead, one can envision a software development workflow heavily infused with AI at every step.

- Requirement Gathering. Use AI to chat with stakeholders, extract insights, and automatically summarize feature requests into actionable inputs.

- Planning & Design. Let AI generate initial user stories, technical specs, or architectural suggestions based on the gathered requirements.

- Code Generation. AI produces up to 80% of the feature’s code, with human developers reviewing and refining the implementation as needed.

- Testing & Monitoring. Automatically generate unit and integration tests. AI can also assist with runtime monitoring and basic anomaly detection (AIOps).

- Ongoing Maintenance. Deploy AI agents to proactively create pull requests for dependency updates, bug fixes, or performance improvements.
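
The mechanical core of the ongoing-maintenance step is simple to sketch: before an agent can open a dependency-update pull request, it has to compare pinned versions against the latest releases. In the Python below, the `latest` mapping stands in for data an agent would fetch from a package index, the version numbers are illustrative, and only exact `==` pins with plain dotted versions are handled.

```python
from __future__ import annotations


def parse_version(v: str) -> tuple[int, ...]:
    """Parse a plain dotted version like '2.31.0' (no pre-release tags)."""
    return tuple(int(part) for part in v.split("."))


def propose_updates(requirements: str, latest: dict[str, str]) -> list[str]:
    """Return 'name: old -> new' for each exactly pinned package with a newer release."""
    proposals = []
    for line in requirements.splitlines():
        line = line.split("#")[0].strip()  # drop comments and surrounding spaces
        if "==" not in line:
            continue  # skip blanks and range specifiers such as >=
        name, pinned = (s.strip() for s in line.split("==", 1))
        newest = latest.get(name.lower())
        if newest and parse_version(newest) > parse_version(pinned):
            proposals.append(f"{name}: {pinned} -> {newest}")
    return proposals


requirements = """requests==2.28.0
flask==3.0.0  # web framework
numpy>=1.24
"""
# Hypothetical "latest release" data; an agent would query a package index.
latest = {"requests": "2.32.3", "flask": "3.0.0", "numpy": "2.0.0"}
print(propose_updates(requirements, latest))  # ['requests: 2.28.0 -> 2.32.3']
```

For real version strings (pre-releases, epochs), the `packaging.version.Version` class orders versions correctly. An agent would then open one pull request per proposal and let the test suite and a human reviewer decide.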

Throughout this, the human developers are in charge of reviewing, making high-level decisions, and handling the truly novel or complex problems. This could lead to a world where release cycles are extremely fast – imagine continuous delivery pipelines where AI fixes things on the fly and developers just merge those changes after a quick review.

Conclusion

As models grow smarter and more integrated, AI will become a core part of the dev lifecycle. It won’t replace developers but will amplify their abilities. With thoughtful adoption, AI can help teams build better software – faster, smarter and more responsibly.


Sources

- Productivity impact of AI coding tools (GitHub)

- Adoption statistics (Stack Overflow dev survey, Opsera)

- GitHub Copilot case studies and research

- AI code quality and maintainability studies (GitClear whitepaper)

- Expert warnings on AI pitfalls (TechTarget, Bee Techy)

- Security concerns (Red Hat)

- Privacy and data leakage stats (GitGuardian)

- Tool features and comparisons (Tabnine blog, Amazon docs)

- Enterprise and market analyses (Menlo Ventures report, Pragmatic Engineer)

- Microsoft and industry announcements on AI policy (Microsoft copyright commitment)

Note: We’ve spent a lot of time and effort creating this research. If you intend to share or make use of it in any way, we kindly ask that you include a backlink to our website – EchoGlobal.